Our publications, presented in reverse chronological order

2023

- Navare, U.P., Ciardo, F., Kompatsiari, K., De Tommaso, D., & Wykowska, A. (2023). Neural markers of self-other integration in joint action – why attribution of intentionality matters. https://psyarxiv.com/djbn4

- Marchesi, S., Abubshait, A., Kompatsiari, K., Wu, Y., & Wykowska, A. (2023). Cultural differences in joint attention and engagement in mutual gaze – a dissociation between implicit and explicit measures. https://psyarxiv.com/d4u6c

- Marchesi, S., Kompatsiari, K., De Tommaso, D., & Wykowska, A. (2023). Adopting the intentional stance interferes with social attention when interacting with a social robot. https://psyarxiv.com/mz4rt

- Roselli, C., Marchesi, S., Russi, N. S., De Tommaso, D., & Wykowska, A. (2023). A study on social inclusion of humanoid robots: a novel embodied adaptation of the Cyberball paradigm. https://doi.org/10.31234/osf.io/nbctp

- Roselli, C., Marchesi, S., De Tommaso, D., & Wykowska, A. (2023). The role of prior exposure in the likelihood of adopting the Intentional Stance toward a humanoid robot. Paladyn, Journal of Behavioral Robotics, vol. 14, no. 1, 2023, pp. 20220103. https://doi.org/10.1515/pjbr-2022-0103

- O'Reilly, Z., Roselli, C., & Wykowska, A. (2023). Does exposure to technological knowledge modulate the adoption of the intentional stance towards humanoid robots in children? https://psyarxiv.com/cfg4k

- Lombardi, M., Roselli, C., Kompatsiari, K., Rospo, F., Natale, L., & Wykowska, A. (2023). The impact of facial expression and eye contact of a humanoid robot on individual Sense of Agency. https://psyarxiv.com/2pfgh

- Roselli, C., Navare, U., Ciardo, F., & Wykowska, A. (2023). Type of education affects individuals' adoption of Intentional Stance towards robots: an EEG study. https://psyarxiv.com/ntfu3

2022

- Spatola, N., Marchesi, S. & Wykowska, A. (2022). The Phenotypes of Anthropomorphism and the Link to Personality Traits. International Journal of Social Robotics. https://doi.org/10.1007/s12369-022-00939-1

- Navare, U.P., Kompatsiari, K., Ciardo, F., & Wykowska, A. (2022). Task sharing with the humanoid robot iCub increases the likelihood of adopting the intentional stance. 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), pp. 135-140. https://doi.org/10.1109/RO-MAN53752.2022.9900746

- O'Reilly, Z., Navare, U. P., Marchesi, S., & Wykowska, A. (2022). Does Embodiment and Interaction Affect the Adoption of the Intentional Stance Towards a Humanoid Robot? International Conference on Social Robotics. http://doi.org/10.31234/osf.io/b8njf

- Parenti, L., Marchesi, S., Belkaid, M., & Wykowska, A. (2022). Attributing intentionality to artificial agents: exposure versus interactive scenarios. International Conference on Social Robotics. http://doi.org/10.31219/osf.io/3zy56

- Parenti, L., Lukomski, A. W., De Tommaso, D., Belkaid, M., & Wykowska, A. (2022). Human-likeness of feedback gestures affects decision processes and subjective trust. International Journal of Social Robotics, 1-9. https://doi.org/10.1007/s12369-022-00927-5

- Spatola, N., Marchesi, S. & Wykowska, A. (2022). Cognitive load affects early processes involved in mentalizing robot behaviour. Nature Scientific Reports, 12:14924. https://doi.org/10.1038/s41598-022-19213-5

- Spatola, N., Marchesi, S., & Wykowska, A. (2022). Different models of anthropomorphism across cultures and ontological limits in current frameworks the integrative framework of anthropomorphism. Frontiers in Robotics and AI, 9:863319. http://doi.org/10.3389/frobt.2022.863319

- Roselli, C., Ciardo, F., De Tommaso, D., & Wykowska, A. (2022). Human-likeness and attribution of intentionality predict vicarious sense of agency over humanoid robot actions. Nature Scientific Reports, 12:13845. http://doi.org/10.1038/s41598-022-18151-6

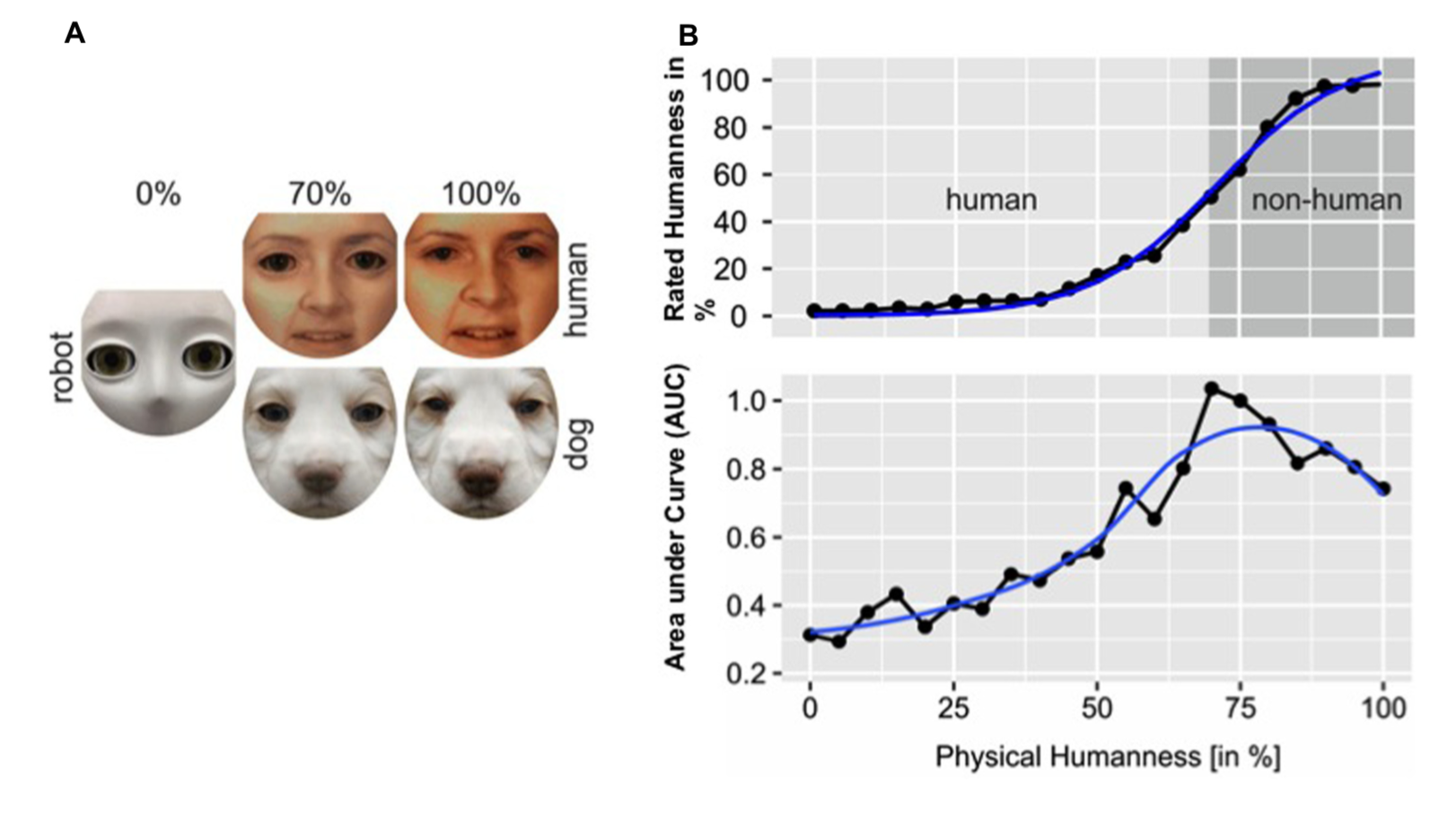

- Ciardo, F., De Tommaso, D., & Wykowska, A. (2022). Human-like behavioural variability blurs the distinction between a human and a machine in a nonverbal Turing test. Science Robotics, 7, eabo1241. doi: 10.1126/scirobotics.abo1241

- Lombardi, M., Maiettini, E., De Tommaso, D., Wykowska, A., & Natale, L. (2022). Towards an attentive robotic architecture: learning-based mutual gaze estimation in human-robot interaction. Frontiers in Robotics and AI, 50. https://doi.org/10.3389/frobt.2022.770165

- Marchesi, S., De Tommaso, D., Perez-Osorio, J., & Wykowska, A. (2022). Belief in Sharing the Same Phenomenological Experience Increases the Likelihood of Adopting the Intentional Stance Toward a Humanoid Robot. Technology, Mind, and Behavior, 3(3). https://doi.org/10.1037/tmb0000072

- Ciardo, F., De Tommaso, D., & Wykowska, A. (2022). Joint action with artificial agents: Human-likeness in behaviour and morphology affects sensorimotor signaling and social inclusion. Computers in Human Behavior, 107237. https://doi.org/10.1016/j.chb.2022.107237

- Ciardo, F., & Wykowska, A. (2022). Robot’s social gaze affects conflict resolution but not conflict adaptations. Journal of Cognition, 5(1). http://doi.org/10.5334/joc.189

- Roselli, C., Ciardo, F., & Wykowska, A. (2022). Social inclusion of robots depends on the way a robot is presented to observers. Paladyn, Journal of Behavioral Robotics, 13, 56-66. http://doi.org/10.1515/pjbr-2022-0003

- Abubshait, A., Parenti, L., Perez-Osorio, J., & Wykowska, A. (2022). Misleading robot signals in a classification task induce cognitive load as measured by theta synchronization between frontal and temporo-parietal brain regions. Frontiers in Neuroergonomics, 3:838136. https://doi.org/10.3389/fnrgo.2022.838136

- Abubshait, A., Siri, G., & Wykowska, A. (2022). Does attributing mental states to a robot influence accessibility of information represented during reading? Acta Psychologica, 228: 103660. https://doi.org/10.1016/j.actpsy.2022.103660

- Kompatsiari, K., Ciardo, F., & Wykowska, A. (2022). To follow or not to follow your gaze: The interplay between strategic control and the eye contact effect on gaze-induced attention orienting. Journal of Experimental Psychology: General, 151, 121-136. https://doi.org/10.1037/xge0001074

- Chevalier, P., Ghiglino, D., Floris, F., Priolo, T., & Wykowska, A. (2022). Visual and Hearing Sensitivity Affect Robot-Based Training for Children Diagnosed With Autism Spectrum Disorder. Frontiers in Robotics and AI, 8:748853. https://doi.org/10.3389/frobt.2021.748853

2021

- Perez-Osorio, J., Abubshait, A., & Wykowska, A. (2021). Irrelevant Robot Signals in a Categorization Task Induce Cognitive Conflict in Performance, Eye Trajectories, the N2 Component of the EEG Signal, and Frontal Theta Oscillations. Journal of Cognitive Neuroscience, 34(1), 108-126. https://doi.org/10.1162/jocn_a_01786

- Willemse, C., Abubshait, A., & Wykowska, A. (2021). Motor behaviour mimics the gaze response in establishing joint attention, but is moderated by individual differences in adopting the intentional stance towards a robot avatar. Visual Cognition, 1-12. https://doi.org/10.1080/13506285.2021.1994494

- Parenti, L., Marchesi, S., Belkaid, M., & Wykowska, A. (2021). Exposure to Robotic Virtual Agent Affects Adoption of Intentional Stance. In Proceedings of the 9th International Conference on Human-Agent Interaction (HAI '21). Association for Computing Machinery, New York, NY, USA, 348–353. DOI: https://doi.org/10.1145/3472307.3484667

- O’Reilly Z., Ghiglino D., Spatola N., Wykowska A. (2021) Modulating the Intentional Stance: Humanoid Robots, Narrative and Autistic Traits. In: Li H. et al. (eds) Social Robotics. ICSR 2021. Lecture Notes in Computer Science, vol 13086. Springer, Cham. https://doi.org/10.1007/978-3-030-90525-5_61

- Marchesi S., Roselli C., Wykowska A. (2021) Cultural Values, but not Nationality, Predict Social Inclusion of Robots. In: Li H. et al. (eds) Social Robotics. ICSR 2021. Lecture Notes in Computer Science, vol 13086. Springer, Cham. https://doi.org/10.1007/978-3-030-90525-5_5


- Perez-Osorio, J., Wiese, E., & Wykowska, A. (2021). Theory of Mind and Joint Attention. The Handbook on Socially Interactive Agents: 20 years of Research on Embodied Conversational Agents, Intelligent Virtual Agents, and Social Robotics Volume 1: Methods, Behavior, Cognition (1st ed.). Association for Computing Machinery, New York, NY, USA, 311–348. DOI: https://doi.org/10.1145/3477322.3477332

- Spatola, N., Marchesi, S. & Wykowska, A. (2021). The Intentional Stance Test-2: How to Measure the Tendency to Adopt Intentional Stance Towards Robots. Frontiers in Robotics and AI. 8:666586. doi: 10.3389/frobt.2021.666586

- Abubshait, A., Perez-Osorio, J., De Tommaso, D., & Wykowska, A. (2021). Collaboratively framed interactions increase the adoption of intentional stance towards robots. In 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), pp. 886-891, doi: 10.1109/RO-MAN50785.2021.9515515.

- Ciardo, F., De Tommaso, D., & Wykowska, A. (2021). Effects of erring behavior in a human-robot joint musical task on adopting Intentional Stance toward the iCub robot. In 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), pp. 698-703, doi: 10.1109/RO-MAN50785.2021.9515434.

- Roselli, C., Ciardo, F., & Wykowska, A. (2021). Intentions with actions: The role of intentionality attribution on the vicarious sense of agency in Human–Robot interaction. Quarterly Journal of Experimental Psychology, 1:17. DOI: https://doi.org/10.1177/17470218211042003

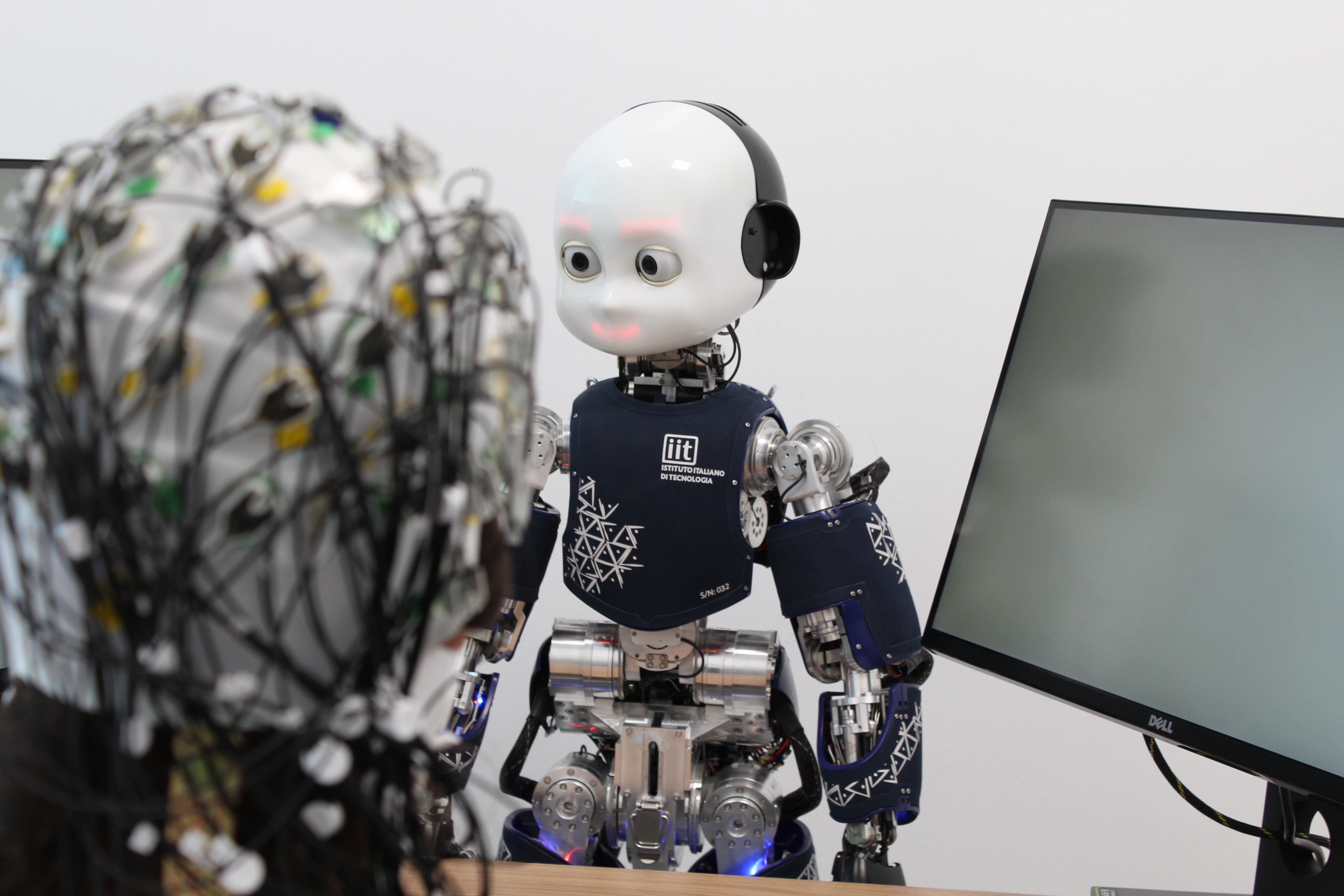

- Belkaid, M., Kompatsiari, K., De Tommaso, D., Zablith, I., & Wykowska, A. (2021). Mutual gaze with a robot affects human neural activity and delays decision-making processes. Science Robotics, 6(58), eabc5044. DOI: 10.1126/scirobotics.abc5044

- Marchesi, S., Bossi, F., Ghiglino, D., De Tommaso, D. & Wykowska, A. (2021). I Am Looking for Your Mind: Pupil Dilation Predicts Individual Differences in Sensitivity to Hints of Human-Likeness in Robot Behavior. Frontiers in Robotics and AI, 8:653537. doi: 10.3389/frobt.2021.653537

- Ghiglino, D., Willemse, C., De Tommaso, D. & Wykowska, A. (2021). Mind the Eyes: Artificial Agents’ Eye Movements Modulate Attentional Engagement and Anthropomorphic Attribution. Frontiers in Robotics and AI, 8:642796. doi: 10.3389/frobt.2021.642796

- Schellen, E., Bossi, F. & Wykowska, A. (2021). Robot Gaze Behavior Affects Honesty in Human-Robot Interaction. Frontiers in Artificial Intelligence. 4:663190. doi: 10.3389/frai.2021.663190

- Spatola, N. & Wykowska, A. (2021). The personality of anthropomorphism: How the need for cognition and the need for closure define attitudes and anthropomorphic attributions toward robots. Computers in Human Behavior, 122:106841, ISSN 0747-5632, https://doi.org/10.1016/j.chb.2021.106841

- Chevalier, P., Vasco, V., Willemse, C., De Tommaso, D., Tikhanoff, V., Pattacini, U. & Wykowska, A. (2021). Upper limb exercise with physical and virtual robots: Visual sensitivity affects task performance. Paladyn, Journal of Behavioral Robotics, vol. 12, no. 1, pp. 199-213. https://doi.org/10.1515/pjbr-2021-0014

- Kompatsiari, K., Bossi, F., & Wykowska, A. (2021). Eye contact during joint attention with a humanoid robot modulates oscillatory brain activity. Social Cognitive and Affective Neuroscience. https://doi.org/10.1093/scan/nsab001

- Marchesi, S., Spatola, N., Perez-Osorio, J., & Wykowska, A. (2021). Human vs Humanoid: A behavioral investigation of the individual tendency to adopt the intentional stance. HRI '21: Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction. March 2021, Pages 332–340. https://doi.org/10.1145/3434073.3444663

- Wykowska, A. (2021). Robots as mirrors of the human mind. Current Directions in Psychological Science. https://doi.org/10.1177/0963721420978609

- Hinz, N., Ciardo, F., & Wykowska, A. (2021). ERP markers of action planning and outcome monitoring in human–robot interaction. Acta Psychologica, Vol. 212. https://doi.org/10.1016/j.actpsy.2020.103216

2020

- Abubshait, A. & Wykowska, A. (2020). Repetitive Robot Behavior Impacts Perception of Intentionality and Gaze-Related Attentional Orienting. Frontiers in Robotics and AI, 7:565825. doi: 10.3389/frobt.2020.565825

- Ciardo F., Ghiglino D., Roselli C., & Wykowska A. (2020) The Effect of Individual Differences and Repetitive Interactions on Explicit and Implicit Attitudes Towards Robots. In: Wagner A.R. et al. (eds) Social Robotics. ICSR 2020. Lecture Notes in Computer Science, vol 12483. Springer, Cham. https://doi.org/10.1007/978-3-030-62056-1_39

- Bossi, F., Willemse, C., Cavazza, J., Marchesi, S., Murino, V., & Wykowska, A. (2020). The human brain reveals resting-state activity patterns that are predictive of biases in attitudes toward robots. Science Robotics, Vol. 5, Issue 46. doi: 10.1126/scirobotics.abb6652

- Ghiglino, D. & Wykowska, A. (2020). When Robots (pretend to) Think. In Artificial Intelligence: Reflections in Philosophy, Theology, and the Social Sciences. Goecke, P. B., & Rosenthal-von der Pütten, A. M. (Eds.). Mentis. https://doi.org/10.30965/9783957437488_006

- Wykowska, A. (2020). Social Robots to Test Flexibility of Human Social Cognition. International Journal of Social Robotics. https://doi.org/10.1007/s12369-020-00674-5

- Marchesi, S., Perez-Osorio, J., De Tommaso, D., & Wykowska, A. (2020) Don’t overthink: fast decision making combined with behavior variability perceived as more human-like. 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 2020, pp. 54-59, doi: 10.1109/RO-MAN47096.2020.9223522. Preprint: https://psyarxiv.com/vz2pt/

- Ghiglino, D., De Tommaso, D., Willemse, C., Marchesi, S., & Wykowska, A. (accepted) Can I get your (robot) attention? Human sensitivity to subtle hints of human-likeness in a humanoid robot’s behavior. Conference CogSci 2020. Preprint: https://psyarxiv.com/kfy4g

- Ciardo, F. & Wykowska, A. (2020) Social assistive robotics as a tool to enhance socio-cognitive development: Benefits, limits, and future directions, in "Sistemi intelligenti, Rivista quadrimestrale di scienze cognitive e di intelligenza artificiale" 1/2020, pp. 9-25, doi: 10.1422/96277

- Ghiglino, D., Willemse, C., De Tommaso, D., Bossi, F. & Wykowska, A. (2020). At first sight: robots’ subtle eye movement parameters affect human attentional engagement, spontaneous attunement and perceived human-likeness. Paladyn, Journal of Behavioral Robotics. https://doi.org/10.1515/pjbr-2020-0004

- Perez-Osorio, J. & Wykowska, A. (2020). Adopting the intentional stance toward natural and artificial agents. Philosophical Psychology. https://doi.org/10.1080/09515089.2019.1688778 Preprint: https://psyarxiv.com/t7dwg/

- Chevalier, P., Kompatsiari, K., Ciardo, F., & Wykowska, A. (2020). Examining joint attention with the use of humanoid robots – a new approach to study fundamental mechanisms of social cognition. Psychonomic Bulletin & Review, 27, 217–236. https://doi.org/10.3758/s13423-019-01689-4

- Ciardo, F., Beyer, F., De Tommaso, D., & Wykowska, A. (2020). Attribution of intentional agency towards robots reduces one's own sense of agency. Cognition. https://doi.org/10.1016/j.cognition.2019.104109

2019

- Chevalier, P., Kompatsiari, K., Ciardo, F., & Wykowska, A. (accepted). Examining joint attention with the use of humanoid robots - a new approach to study fundamental mechanisms of social cognition. Psychonomic Bulletin & Review.

- Hinz, N.-A., Ciardo, F., Wykowska, A. (2019). Individual differences in attitude toward robots predict behavior in human-robot interaction. In: Salichs M. et al. (eds) Social Robotics. ICSR 2019. Lecture Notes in Computer Science, vol 11876. Springer, Cham. https://doi.org/10.1007/978-3-030-35888-4_7

- Perez-Osorio J., Marchesi S., Ghiglino D., Ince M., Wykowska A. (2019) More Than You Expect: Priors Influence on the Adoption of Intentional Stance Toward Humanoid Robots. In: Salichs M. et al. (eds) Social Robotics. ICSR 2019. Lecture Notes in Computer Science, vol 11876. Springer, Cham. https://doi.org/10.1007/978-3-030-35888-4_12

- Roselli, C., Ciardo, F., & Wykowska, A. (2019). Robots improve judgments on self-generated actions: an Intentional Binding Study. In: Salichs M. et al. (eds) Social Robotics. ICSR 2019. Lecture Notes in Computer Science, vol 11876. Springer, Cham. https://doi.org/10.1007/978-3-030-35888-4_9

- Kompatsiari, K., Ciardo, F., De Tommaso, D., & Wykowska, A. (accepted). Measuring engagement elicited by eye contact in Human-Robot Interaction. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China

- Perez-Osorio, J., & Wykowska, A. (2019) Adopting the Intentional Stance Towards Humanoid Robots. In: Laumond JP., Danblon E., Pieters C. (eds) Wording Robotics. Springer Tracts in Advanced Robotics, vol 130. Springer, Cham. doi: https://doi.org/10.1007/978-3-030-17974-8_10

- De Tommaso, D., & Wykowska, A. (2019). TobiiGlassesPySuite: an open-source suite for using the Tobii Pro Glasses 2 in eye-tracking studies. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications (ETRA '19). ACM, New York, NY, USA, Article 46, 5 pages. DOI: https://doi.org/10.1145/3314111.3319828

- Kompatsiari, K., Ciardo, F., Tikhanoff, V., Metta, G., & Wykowska, A. (2019) It’s in the eyes: The engaging role of eye contact in HRI. International Journal of Social Robotics. https://doi.org/10.1007/s12369-019-00565-4

- Ciardo, F., De Tommaso, D., & Wykowska, A. (2019). Humans socially attune to their “follower” robot. ACM/IEEE International Conference on Human-Robot Interaction, HRI 2019, Daegu, Korea. doi: https://doi.org/10.1109/HRI.2019.8673262

- Marchesi, S., Ghiglino, D., Ciardo, F., Perez-Osorio, J., Baykara, E., & Wykowska, A. (2019) Do we adopt the Intentional Stance toward humanoid robots? Frontiers in Psychology. doi: https://doi.org/10.3389/fpsyg.2019.00450

- Willemse, C., & Wykowska, A. (2019). In natural interaction with embodied robots we prefer it when they follow our gaze: a gaze-contingent mobile eye-tracking study. Philosophical Transactions of the Royal Society B: Biological Sciences. doi: https://doi.org/10.1098/rstb.2018.0024

- Cross, E. S., Hortensius, R., & Wykowska, A. (2019). From social brains to social robots: Applying neurocognitive insights to human-robot interaction. Philosophical Transactions of the Royal Society B: Biological Sciences. doi: https://doi.org/10.1098/rstb.2018.0036

- Schellen, E. & Wykowska, A. (2019). Intentional Mindset Toward Robots—Open Questions and Methodological Challenges. Frontiers in Robotics and AI, 5:139. doi: 10.3389/frobt.2018.00139

2018

Journal papers

- Kompatsiari, K., Ciardo, F., Tikhanoff, V., Metta, G., & Wykowska, A. (2018). On the role of eye contact in gaze cueing. Scientific Reports, 8(1), 17842. https://doi.org/10.1038/s41598-018-36136-2

- Ciardo, F., & Wykowska, A. (2018). Response Coordination Emerges in Cooperative but Not Competitive Joint Task. Frontiers in Psychology, 9:1919. doi: 10.3389/fpsyg.2018.01919

- Willemse, C., Marchesi, S., & Wykowska, A. (2018). Robot Faces that Follow Gaze Facilitate Attentional Engagement and Increase Their Likeability. Frontiers in Psychology, 9:70. doi: 10.3389/fpsyg.2018.00070

- Havlicek, O., Mueller, H., & Wykowska, A. (2018). Distract yourself: prediction of salient distractors by own actions and external cues. Psychological Research. doi: https://doi.org/10.1007/s00426-018-1129-x

Conference papers

- Ciardo, F., De Tommaso, D., Beyer, F., & Wykowska, A. (2018). Reduced sense of agency in human-robot interaction. In Proceedings of the International Conference for Social Robotics (ICSR), 2018, Qingdao, China. Preprint doi: 10.31234/osf.io/8bka3

- Ghiglino, D., De Tommaso, D., & Wykowska, A. (2018). Attributing human-likeness to an avatar: the role of time and space in the perception of biological motion. In Proceedings of the International Conference for Social Robotics (ICSR), 2018, Qingdao, China. Preprint doi: 10.31234/osf.io/7qm3g

- Kompatsiari, K., Perez-Osorio, J., De Tommaso, D., Metta, G., & Wykowska, A. (2018). Neuroscientifically-grounded research for improved human-robot interaction. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, 6 Pages. doi: 10.1109/IROS.2018.8594441

- Perez-Osorio, J., De Tommaso D., Baykara, E., & Wykowska, A. (2018). Joint action with iCub: a successful adaptation of a paradigm of cognitive neuroscience to HRI. In Proceedings of the 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing - Tai'an, 6 Pages. doi: 10.1109/ROMAN.2018.8525536

- Wykowska, A., Metta, G., Becchio, C., Hortensius, R. & Cross, E. (2018). Cognitive and social neuroscience methods for HRI. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, March 2018 (HRI '18 Companion), 2 pages. doi: 10.1145/3173386.3173563

Other

- Schellen, E., Perez-Osorio, J., & Wykowska, A. (2018). Social Cognition in Human-Robot Interaction: Putting the ‘H’ back in ‘HRI’. ERCIM News 114, Special theme: Human-Robot Interaction. (Eds) Serena Ivaldi and Maria Pateraki. Online: https://ercim-news.ercim.eu/en114/special/social-cognition-in-human-robot-interaction-putting-the-h-back-in-hri

2017

- Natale, L., Bartolozzi, C., Pucci, D., Wykowska, A., & Metta, G. (2017). The not-yet-finished story of building a robot child. Science Robotics, Vol. 2, Issue 13, eaaq1026 DOI: 10.1126/scirobotics.aaq1026

- Kompatsiari K., Tikhanoff V., Ciardo F., Metta G., & Wykowska A. (2017). The Importance of Mutual Gaze in Human-Robot Interaction. In: Kheddar A. et al. (eds) Social Robotics. ICSR 2017. Lecture Notes in Computer Science, vol 10652, Springer, 443-452. DOI: https://doi.org/10.1007/978-3-319-70022-9_44

- Wiese, E., Metta, G., & Wykowska, A. (2017). Robots as Intentional Agents: Using neuroscientific methods to make robots appear more social. Frontiers in Psychology, 8:1663, DOI: 10.3389/fpsyg.2017.01663

2018

Neuroscientifically-Grounded Research for Improved Human-Robot Interaction

ABSTRACT

The present study highlights the benefits of using well-controlled experimental designs, grounded in experimental psychology research and objective neuroscientific methods, for generating progress in human-robot interaction (HRI) research. More specifically, we aimed at implementing a well-studied paradigm of attentional cueing through gaze (the so-called “joint attention” or “gaze cueing”) in an HRI protocol involving the iCub robot. Consistent with documented results in gaze-cueing research, we found faster response times and enhanced event-related potentials of the EEG signal for discrimination of cued, relative to uncued, targets. These results are informative for the robotics community by showing that a humanoid robot with mechanistic eyes and human-like characteristics of the face is in fact capable of engaging a human in joint attention to a similar extent as another human would. More generally, we propose that the methodology of combining neuroscience methods with an HRI protocol contributes to understanding mechanisms of human social cognition in interactions with robots and to improving robot design, thanks to systematic and well-controlled experimentation tapping into specific cognitive mechanisms of the human, such as joint attention.

-

Citation

Kompatsiari, K., Perez-Osorio, J., De Tommaso, D., Metta, G., & Wykowska, A. (2018). Neuroscientifically-grounded research for improved human-robot interaction. 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, 6 Pages. doi: 10.1109/IROS.2018.8594441

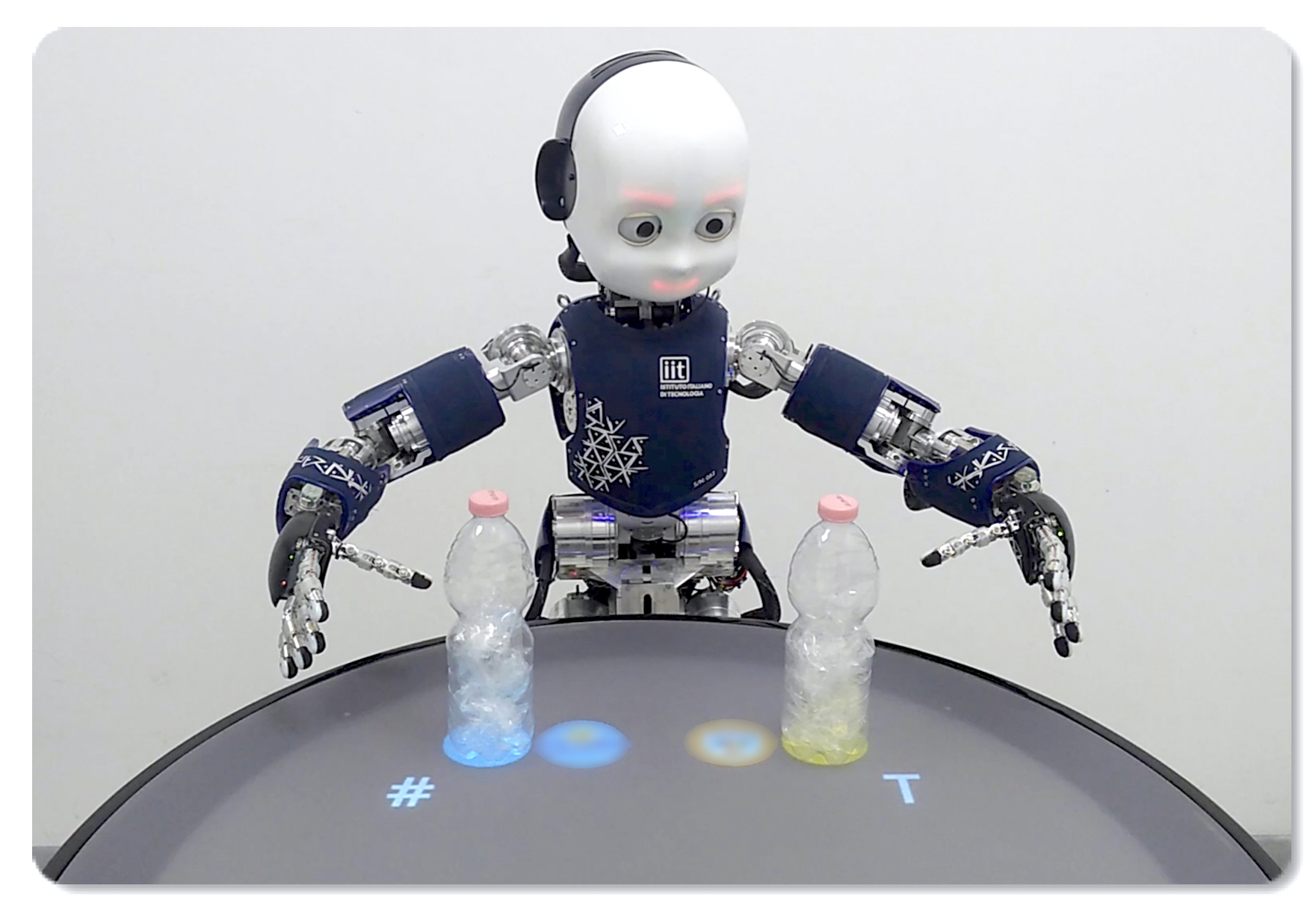

Joint action with iCub: a successful adaptation of a paradigm of cognitive neuroscience to HRI

ABSTRACT

Robots will soon enter social environments shared with humans. We need robots that are able to efficiently convey social signals during interactions. At the same time, we need to understand the impact of robots' behavior on the human brain. For this purpose, human behavioral and neural responses to robot behavior should be quantified, offering feedback on how to improve and adjust robot behavior. Under this premise, our approach is to use methods of experimental psychology and cognitive neuroscience to assess the human's reception of a robot in human-robot interaction protocols. As an example of this approach, we report an adaptation of a classical paradigm of experimental cognitive psychology to a naturalistic human-robot interaction scenario. We show the feasibility of such an approach with a validation pilot study, which demonstrated that our design yielded a similar pattern of data to what has been previously observed in experiments within the area of cognitive psychology. Our approach allows for addressing specific mechanisms of human cognition that are elicited during human-robot interaction, and thereby, in a longer-term perspective, it will allow for designing robots that are well-attuned to the workings of the human brain.

-

Citation

Perez-Osorio, J., De Tommaso D., Baykara, E., & Wykowska, A. (2018). Joint action with iCub: a successful adaptation of a paradigm of cognitive neuroscience to HRI. 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing - Tai'an, 6 Pages. doi: 10.1109/ROMAN.2018.8525536

Social Cognition in Human-Robot Interaction: Putting the ‘H’ back in ‘HRI’

ABSTRACT

The Social Cognition in Human-Robot Interaction (S4HRI) research line at the Istituto Italiano di Tecnologia (IIT) applies methods from experimental psychology and cognitive neuroscience to human-robot interaction studies. With this approach, we maintain excellent experimental control, without losing ecological validity and generalisability, and thus we can provide reliable results informing about robot design that best evokes mechanisms of social cognition in the human interaction partner.

-

Citation

Schellen, E., Pérez-Osorio, J., & Wykowska, A. (2018). Social Cognition in Human-Robot Interaction: Putting the ‘H’ back in ‘HRI’. ERCIM News 114, Special theme: Human-Robot Interaction. (Eds) Serena Ivaldi and Maria Pateraki. Online: https://ercim-news.ercim.eu/en114/special/social-cognition-in-human-robot-interaction-putting-the-h-back-in-hri

Cognitive and social neuroscience methods for HRI

ABSTRACT

This workshop focuses on research in HRI using objective measures from social and cognitive neuroscience to provide guidelines for the design of robots well-tailored to the workings of the human brain. The aim is to present results from experimental studies in which human behavior and brain activity are measured during interactive protocols with robots. Discussion will focus on means to improve replicability and generalizability of experimental results in HRI.

-

Citation

Wykowska, A., Metta, G., Becchio, C., Hortensius, R. & Cross, E. (2018). Cognitive and social neuroscience methods for HRI. In Proceedings of ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, March 2018 (HRI '18 Companion), 2 pages. DOI: 10.1145/3173386.3173563

Robot Faces that Follow Gaze Facilitate Attentional Engagement and Increase Their Likeability

ABSTRACT

Gaze behavior of humanoid robots is an efficient mechanism for cueing our spatial orienting, but less is known about the cognitive–affective consequences of robots responding to human directional cues. Here, we examined how the extent to which a humanoid robot (iCub) avatar directed its gaze to the same objects as our participants affected engagement with the robot, subsequent gaze-cueing, and subjective ratings of the robot’s characteristic traits. In a gaze-contingent eye-tracking task, participants were asked to indicate a preference for one of two objects with their gaze while an iCub avatar was presented between the object photographs. In one condition, the iCub then shifted its gaze toward the object chosen by a participant in 80% of the trials (joint condition) and in the other condition it looked at the opposite object 80% of the time (disjoint condition). Based on the literature in human–human social cognition, we took the speed with which the participants looked back at the robot as a measure of facilitated reorienting and robot-preference, and found these return saccade onset times to be quicker in the joint condition than in the disjoint condition. As indicated by results from a subsequent gaze-cueing task, the gaze-following behavior of the robot had little effect on how our participants responded to gaze cues. Nevertheless, subjective reports suggested that our participants preferred the iCub following participants’ gaze to the one with a disjoint attention behavior, and rated it as more human-like and as more likeable. Taken together, our findings show a preference for robots that follow our gaze. Importantly, such subtle differences in gaze behavior are sufficient to influence our perception of humanoid agents, which clearly provides hints about the design of behavioral characteristics of humanoid robots in more naturalistic settings.

-

Citation

Willemse, C., Marchesi, S., & Wykowska, A. (2018). Robot Faces that Follow Gaze Facilitate Attentional Engagement and Increase Their Likeability. Frontiers in Psychology, 9:70. doi: 10.3389/fpsyg.2018.00070

2017

The not-yet-finished story of building a robot child

ABSTRACT

The iCub open-source humanoid robot child is a successful initiative supporting research in embodied artificial intelligence.

-

Citation

Natale, L., Bartolozzi, C., Pucci, D., Wykowska, A., & Metta, G. (2017). The not-yet-finished story of building a robot child. Science Robotics, Vol. 2, Issue 13, eaaq1026 DOI: 10.1126/scirobotics.aaq1026

The Importance of Mutual Gaze in Human-Robot Interaction

ABSTRACT

Mutual gaze is a key element of human development and constitutes an important factor in human interactions. In this study, we examined, through analysis of subjective reports, the influence of online eye contact by a humanoid robot on humans’ reception of the robot. To this end, we manipulated the robot gaze, i.e., mutual (social) gaze and neutral (non-social) gaze, throughout an experiment involving letter identification. Our results suggest that people are sensitive to the mutual gaze of an artificial agent, that they feel more engaged with the robot when mutual gaze is established, and that eye contact supports attributing human-like characteristics to the robot. These findings are relevant both to human-robot interaction (HRI) research, for enhancing the social behavior of robots, and to cognitive neuroscience, for studying mechanisms of social cognition in relatively realistic social interactive scenarios.

-

Citation

Kompatsiari K., Tikhanoff V., Ciardo F., Metta G., & Wykowska A. (2017). The Importance of Mutual Gaze in Human-Robot Interaction. In: Kheddar A. et al. (eds) Social Robotics. ICSR 2017. Lecture Notes in Computer Science, vol 10652, Springer, 443-452. DOI: https://doi.org/10.1007/978-3-319-70022-9_44

Robots as Intentional Agents: Using neuroscientific methods to make robots appear more social

ABSTRACT

Robots are increasingly envisaged as our future cohabitants. However, while considerable progress has been made in recent years in terms of their technological realization, the ability of robots to interact with humans in an intuitive and social way is still quite limited. An important challenge for social robotics is to determine how to design robots that can perceive the user’s needs, feelings, and intentions, and adapt to users over a broad range of cognitive abilities. It is conceivable that if robots were able to adequately demonstrate these skills, humans would eventually accept them as social companions. We argue that the best way to achieve this is using a systematic experimental approach based on behavioral and physiological neuroscience methods such as motion/eye-tracking, electroencephalography, or functional near-infrared spectroscopy embedded in interactive human–robot paradigms. This approach requires understanding how humans interact with each other, how they perform tasks together and how they develop feelings of social connection over time, and using these insights to formulate design principles that make social robots attuned to the workings of the human brain. In this review, we put forward the argument that the likelihood of artificial agents being perceived as social companions can be increased by designing them in a way that they are perceived as intentional agents that activate areas in the human brain involved in social-cognitive processing. We first review literature related to social-cognitive processes and mechanisms involved in human–human interactions, and highlight the importance of perceiving others as intentional agents to activate these social brain areas. We then discuss how attribution of intentionality can positively affect human–robot interaction by (a) fostering feelings of social connection, empathy and prosociality, and by (b) enhancing performance on joint human–robot tasks. 
Lastly, we describe circumstances under which attribution of intentionality to robot agents might be disadvantageous, and discuss challenges associated with designing social robots that are inspired by neuroscientific principles.

-

Citation

Wiese, E., Metta, G., & Wykowska, A. (2017). Robots as Intentional Agents: Using neuroscientific methods to make robots appear more social. Frontiers in Psychology, 8:1663, DOI: 10.3389/fpsyg.2017.01663