InStance

Intentional Stance for social attunement

Prof. Agnieszka Wykowska explains, in a nutshell, the background and aims of the ERC project.

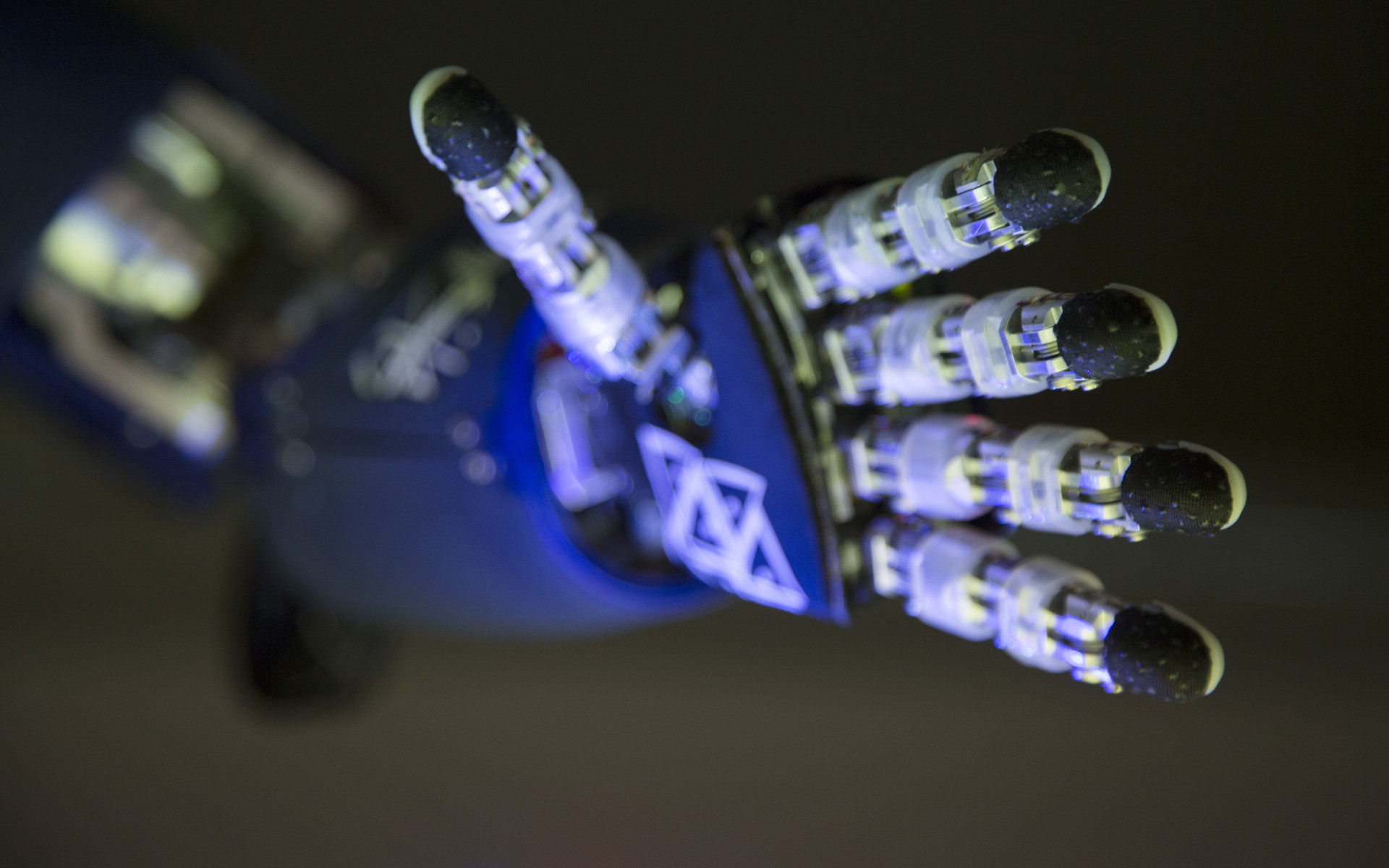

However, for non-intentional systems (such as human-made artifacts), we achieve the most efficient predictions by adopting the Design Stance. Using this strategy, we assume that the system will respond according to the purpose it was designed for (e.g., pushing the brakes will reduce the speed of the car). Adopting either the intentional stance or the design stance has profound consequences not only for the type of predictions we make but also for the chances of becoming engaged in a social situation. For example, when I see somebody pointing, I assume that the behavior has an intention (i.e., I adopt the intentional stance). Then, I direct my attention to that location, and we establish a joint focus of attention, thereby becoming socially attuned. In contrast, if I see a machine’s artificial arm pointing somewhere, I might be unwilling to attend there, as I do not believe that the machine wants to show me something, i.e., there is no intentional communicative content in the gesture.

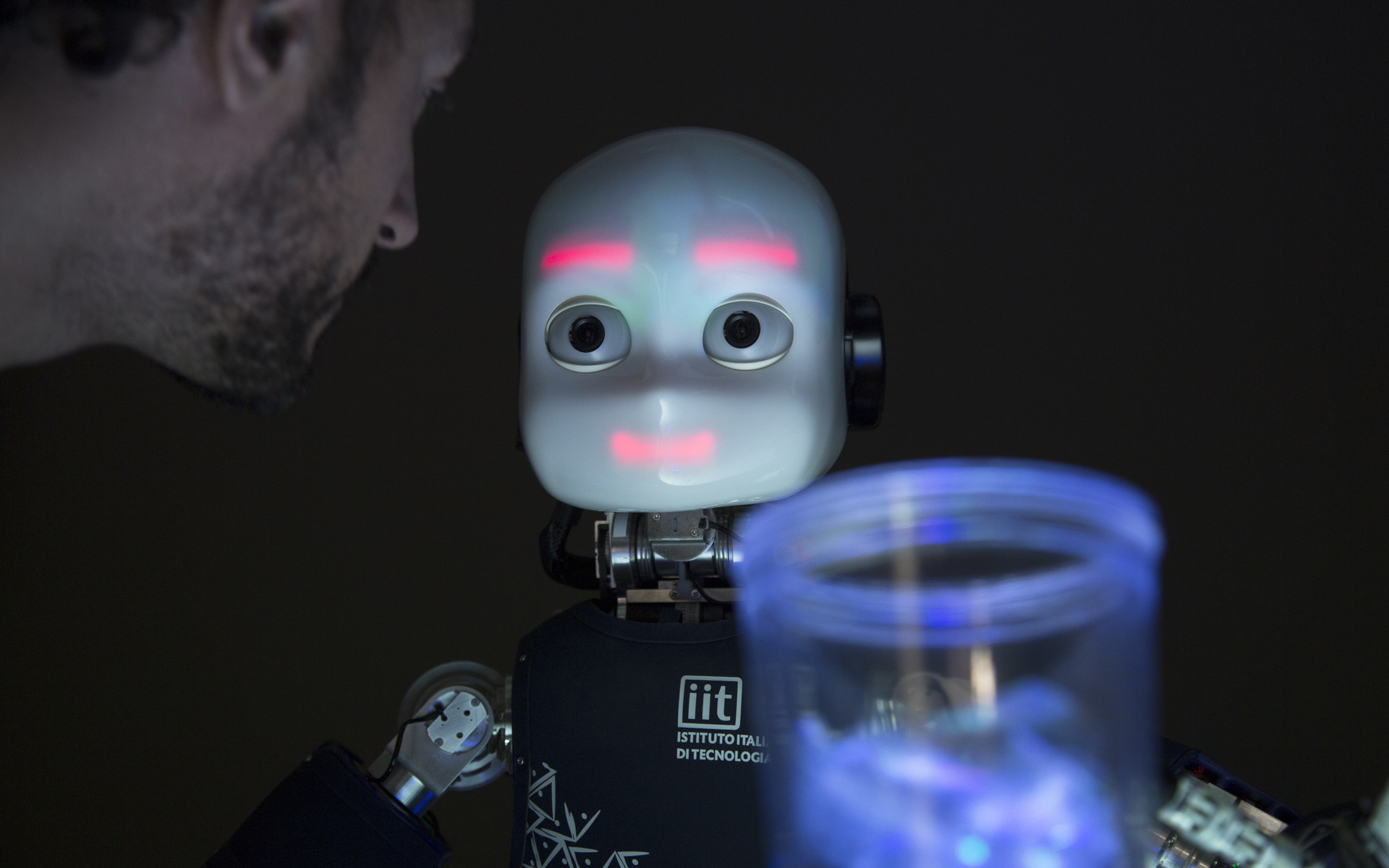

This raises the question: to what extent are humans ready to attune socially with artificial systems that have a human-like appearance, such as humanoid robots? It might be that once a robot imitates human-like behavior at the level of subtle (and often implicit) social signals, humans automatically perceive its behavior as reflecting mental states. This would presumably evoke social cognition mechanisms to the same (or a similar) extent as in human-human interactions, allowing social attunement.

Let's Talk! An interview with Agnieszka Wykowska, ERC winner